Artificial Intelligence (AI)

Definition of Artificial Intelligence

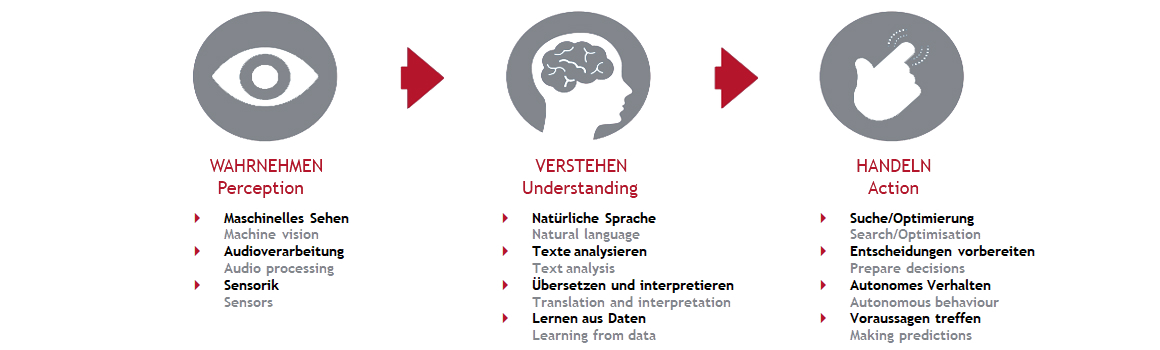

Artificial Intelligence (AI) is the ability of machines to perform cognitive functions that are associated with the human mind: perceiving, thinking, learning and problem solving.

AI-based systems analyse their surroundings and act autonomously to achieve predetermined objectives. They work either with rule-based knowledge created by experts (rule-based AI) or with statistical models derived from data (machine learning, or learning-based AI). Any system that follows the chain sense, think, act and can be implemented digitally is known as an AI system. This includes pure software systems, which make decisions in a virtual environment, as well as hardware systems such as robots.

Source: AIM AT 2030, www.bmvit.gv.at
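The sense, think, act chain described above can be sketched for a rule-based system as follows. This is only a minimal illustration: the thermostat scenario, the `Reading` class, the thresholds and the function names are all assumptions made for this example and do not come from the cited source.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """A single observation of the environment (hypothetical example)."""
    temperature_c: float


def sense(environment: dict) -> Reading:
    # Sense: read the current state of the (virtual) environment.
    return Reading(temperature_c=environment["temperature_c"])


def think(reading: Reading, target_c: float = 21.0, tolerance_c: float = 1.0) -> str:
    # Think: apply expert-written rules to choose an action (rule-based AI).
    if reading.temperature_c < target_c - tolerance_c:
        return "heat"
    if reading.temperature_c > target_c + tolerance_c:
        return "cool"
    return "idle"


def act(environment: dict, action: str) -> None:
    # Act: change the environment according to the chosen action.
    if action == "heat":
        environment["temperature_c"] += 0.5
    elif action == "cool":
        environment["temperature_c"] -= 0.5


environment = {"temperature_c": 17.0}
actions = []
for _ in range(10):
    action = think(sense(environment))
    act(environment, action)
    actions.append(action)

print(actions)
print(environment["temperature_c"])
```

In a learning-based system, the hand-written rules in `think` would be replaced by a statistical model trained on data.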

AI systems based on statistical models

Training AI solutions (statistical models) on labelled historical data is called “supervised learning”. A precondition for this approach is the availability of labelled data: every observation must be labelled with the corresponding correct answer. From these labels the algorithm learns what is right and what is wrong (hence “supervised”) in order to create a forecast model.

Statistical methods for supervised learning are divided into regression and classification tasks:

- Regression is the task of modelling continuous target variables. Linear regression is the simplest example of such a forecast model.

- Classification is the task of modelling categorical target variables. Binary logistic regression is the simplest example.

Beyond these, many other statistical methods are used for modelling, such as support vector machines, naive Bayes or neural networks.
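The two simplest models mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the data values are made up, each model uses only one feature, and the logistic regression is fitted with plain gradient descent (learning rate and number of epochs chosen arbitrarily for this example).

```python
import math


def fit_linear_regression(xs, ys):
    """Ordinary least squares for one feature: y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, labels, learning_rate=0.1, epochs=5000):
    """Binary logistic regression for one feature, fitted by gradient descent."""
    intercept, slope = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_intercept = 0.0
        grad_slope = 0.0
        for x, label in zip(xs, labels):
            error = sigmoid(intercept + slope * x) - label
            grad_intercept += error
            grad_slope += error * x
        intercept -= learning_rate * grad_intercept / n
        slope -= learning_rate * grad_slope / n
    return intercept, slope


# Regression: continuous target (made-up values).
reg_intercept, reg_slope = fit_linear_regression(
    [1, 2, 3, 4, 5], [3.1, 4.9, 7.2, 8.8, 11.0]
)

# Classification: categorical (0/1) target (made-up values).
clf_intercept, clf_slope = fit_logistic_regression(
    [1, 2, 3, 4, 5, 6, 7, 8], [0, 0, 1, 0, 1, 0, 1, 1]
)


def predict_probability(x):
    # Estimated probability that an observation with feature value x has label 1.
    return sigmoid(clf_intercept + clf_slope * x)


print(reg_intercept, reg_slope)
print(predict_probability(1), predict_probability(8))
```

The regression model returns a number on a continuous scale, while the classification model returns a probability that is turned into a category, for example by predicting label 1 when the probability exceeds 0.5.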

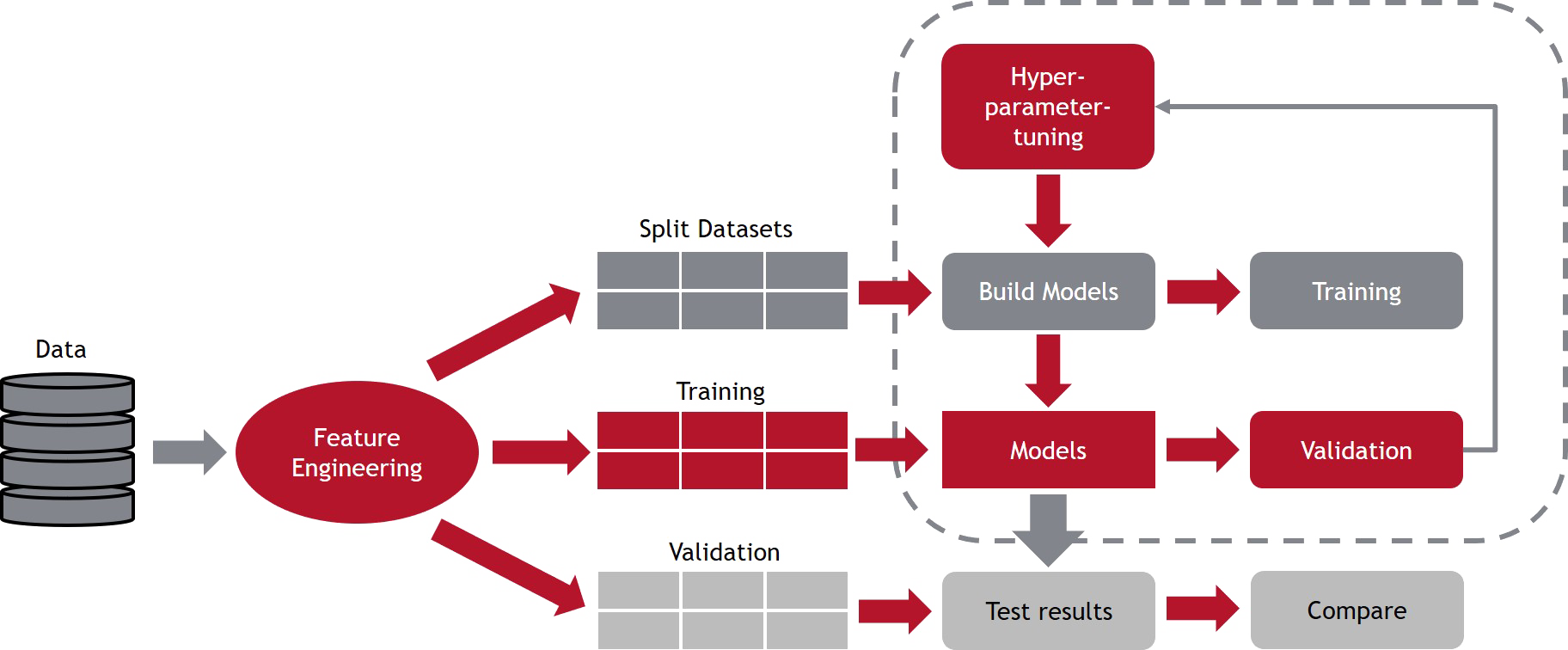

To ensure that the trained algorithm achieves the necessary predictive accuracy, the data used for modelling are split into a training dataset and a validation dataset. The algorithm is trained on the training dataset. Afterwards, the validation dataset, which the algorithm has not seen during training, is used to test the predictive accuracy.
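The split described above might look like the following sketch. The synthetic data, the 80/20 ratio, the fixed random seed and the use of mean absolute error as the accuracy measure are assumptions made for illustration only.

```python
import random


def train_validation_split(rows, validation_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and split it into training and validation parts."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


def fit_line(rows):
    """Least-squares fit of y = intercept + slope * x on (x, y) rows."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def mean_absolute_error(rows, intercept, slope):
    return sum(abs(y - (intercept + slope * x)) for x, y in rows) / len(rows)


# Synthetic "historical" data: y = 2x + 1 plus random noise (assumed for the example).
rng = random.Random(42)
data = []
for _ in range(100):
    x = rng.uniform(0.0, 10.0)
    data.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 1.0)))

training, validation = train_validation_split(data)

# Train only on the training dataset ...
intercept, slope = fit_line(training)

# ... and measure the predictive accuracy on the unseen validation dataset.
validation_mae = mean_absolute_error(validation, intercept, slope)
print(len(training), len(validation), validation_mae)
```

A validation error that is much larger than the training error would suggest that the model has memorised the training data rather than learned a general relationship.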

Particular attention must be paid to hyperparameter tuning when training the model. Hyperparameters are parameters that control the training algorithm and are set before training rather than learned from the data, such as the number of artificial neurons or the number of hidden layers in a neural network.
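Hyperparameter tuning can be sketched as trying several candidate values and keeping the one that performs best on the validation dataset. To keep the example short, it does not use a neural network: it swaps in a k-nearest-neighbours classifier, whose hyperparameter is the number of neighbours `k`. The synthetic data, the candidate values and all function names are assumptions made for illustration.

```python
import random
from collections import Counter


def make_data(n_per_class, rng):
    """Two overlapping 2-D clusters, one per class (synthetic data)."""
    rows = []
    for label, centre in ((0, 0.0), (1, 2.0)):
        for _ in range(n_per_class):
            rows.append((rng.gauss(centre, 1.0), rng.gauss(centre, 1.0), label))
    rng.shuffle(rows)
    return rows


def knn_predict(training, x1, x2, k):
    """Predict the majority label among the k nearest training points."""
    nearest = sorted(
        training, key=lambda row: (row[0] - x1) ** 2 + (row[1] - x2) ** 2
    )[:k]
    votes = Counter(row[2] for row in nearest)
    return votes.most_common(1)[0][0]


def accuracy(training, validation, k):
    hits = sum(
        knn_predict(training, x1, x2, k) == label for x1, x2, label in validation
    )
    return hits / len(validation)


rng = random.Random(7)
rows = make_data(100, rng)
training, validation = rows[:150], rows[150:]

# Try each candidate hyperparameter value and score it on the validation dataset.
candidate_ks = [1, 3, 5, 9, 15]
scores = {k: accuracy(training, validation, k) for k in candidate_ks}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

Because the validation dataset is used here to choose `k`, a further held-out test dataset is commonly used to obtain an unbiased estimate of the final model's accuracy.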

Artificial Intelligence made explainable

Any of these methods can make the algorithms arbitrarily complex, e.g. “deep neural networks” with many neurons and hidden layers. We therefore focus on explainable AI solutions, following the principle of choosing the simplest suitable method. To better understand how the features (influencing factors) affect the target value, we use the SHAP approach (SHapley Additive exPlanations). SHAP is a standardised method that assigns each feature its own Shapley value, which quantifies how that particular feature changes the outcome.
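The idea behind Shapley values can be illustrated by computing them exactly for a tiny model. The sketch below does not use the SHAP library; it enumerates all feature coalitions directly, which is only feasible for a handful of features. The linear model, the instance and the single baseline observation (standing in for "feature absent") are assumptions made for this example.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values of each feature for one prediction.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in features]
        return model(mixed)

    result = []
    for i in features:
        others = [j for j in features if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                # Marginal contribution of feature i when it joins the coalition.
                phi += weight * (value(coalition | {i}) - value(coalition))
        result.append(phi)
    return result


def model(x):
    # Hypothetical forecast model with three features.
    return 10.0 + 3.0 * x[0] - 2.0 * x[1] + 0.5 * x[2]


instance = [4.0, 1.0, 10.0]
baseline = [2.0, 3.0, 6.0]

phi = shapley_values(model, instance, baseline)
print(phi)
print(sum(phi), model(instance) - model(baseline))
```

The Shapley values add up to the difference between the prediction for the instance and the prediction for the baseline, which is the "additive" property in the name SHAP.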

AI Academy

In fall 2019 we launched our AI Academy to address these specific training needs and to meet the rising demand. Our AI Academy makes you an expert in the field of AI.

Our training programme includes a one-day basic course, a one-day advanced course and the two training courses “Certified Citizen Data Scientist” and “Certified Citizen Data Driven Problem Solving Training”. Both courses are certified examinations in accordance with ISO 17024, as “Six Sigma Green Belt/Black Belt/Master Black Belt”, in the area of data analytics.

Of course, we also offer solutions and AI training customised for your company. We are happy to integrate other areas of our training portfolio into your AI training.

More information can be found in our new brochure “AI & DATA SCIENCE ACADEMY” and on our LinkedIn focus page “AI – trustworthy and explainable”. If you follow this page, we will keep you up to date with news in this field.